last week in AI #2: agents hit adolescence

agents gold rush, trip down memory lane, to benchmark or buy a yacht?

Hi, it’s Maya,

I have been “blessed” with regular migraines, an experience that I don’t recommend to anyone. The list of desperate attempts to get rid of them includes: pills, massages, acupuncture, slapping myself with a sack of frozen beans, chakra healing with alignment and, lately, AI agent - Manus. I was granted early access to it and the first task I gave it was:

“research migraine trials and make fancy charts for the most promising ones”

not everyone gets a seat at the table

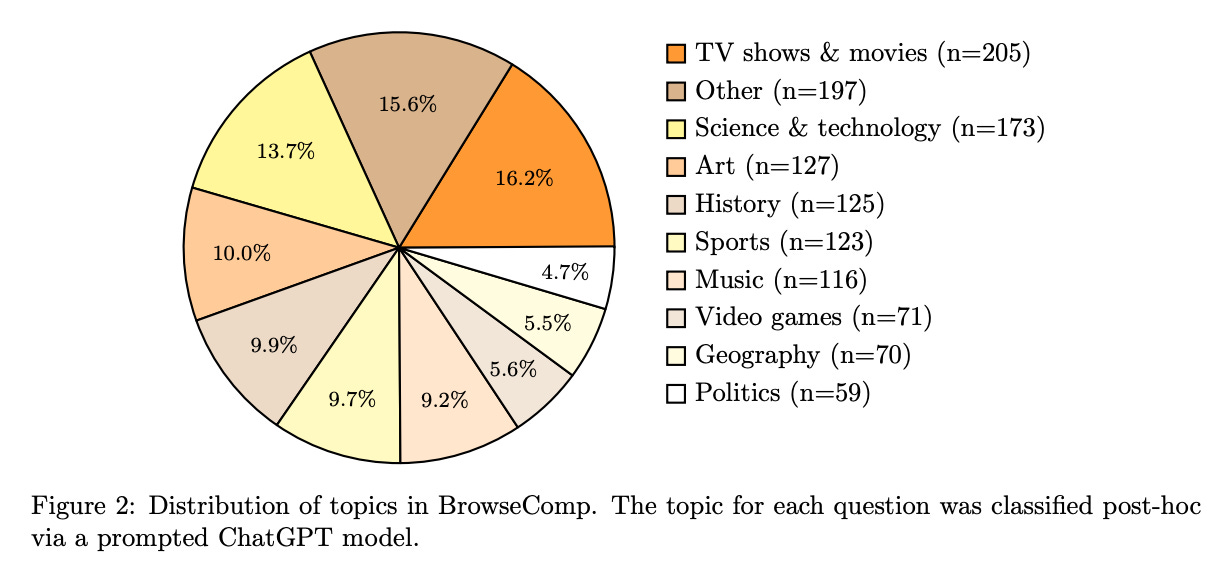

Alas, Manus broke my heart. None of the trials that it listed were useful while my own quick 5-minute research yielded at least a few breakthrough drugs. When OpenAI released BrowseComp last week, a benchmark that measures how good models are at browsing the internet, I finally got the answer to why Manus failed me. It turns out, when you give a model a truly challenging task of finding a “difficult-to-find” information on the internet you get the following results:

GPT‑4o model’s accuracy is sad 0.6%

But with browsing enabled, GPT‑4o was successful only 1.9% of the time. It’s enough to prove the theory that having access to the internet isn’t enough without reasoning.

OpenAI’s o1, a reasoning model, scored 9.9%

Deep Research, a model trained to browse the internet, scored an accuracy of 51.5%

It might sound like even the best model is accurate “only” half of the time, but take a look at one of the questions that are a part of the benchmark:

I am searching for the pseudonym of a writer and biographer who authored numerous books, including their autobiography. In 1980, they also wrote a biography of their father. The writer fell in love with the brother of a philosopher who was the eighth child in their family. The writer was divorced and remarried in the 1940s.

Do you think that you would be able to answer this? Apparently, only around 30% of the questions were solved by humans. Which means that DeepResearch, being able to find difficult information half of the time, is better than humans who are able to do the same only one third of the time. So no wonder that Manus, an agent-wrapper around multiple foundation models, primarily Claude 3.5/3.7 failed to cure my migraines, considering its likely reasoning capabilities fall closer to models benchmarked in the o1 class (around 10% accuracy). Speaking of benchmarks, evaluating reasoning model o1 across several benchmarks cost more than a fine, mid-size yacht (around 2.5 mil).

The high cost of evaluating reasoning models, the stark difference between them and basic model capabilities shown in BrowseComp – all point to the same thing. Only a few big players can afford this AI race, so let’s see what they’re focusing on in order to win, starting with OpenAI.

big changes, “tiny” reasons

Between the new benchmark and the announced release of new open-weights model, OpenAI seems like a different, slightly more open, more likable company. This big change in OpenAI’s focus might be a result of the most unusual suspect - a tiny baby. Sam has admitted that becoming a father has changed him as a person. He even paraphrased Ilya:

“I don’t know what the meaning of life is, but I’m sure it has something to do with babies”.

But let’s not get carried away here. OpenAI might have made a few steps to get closer to its research origins, but it’s still pretty much a mysterious black box and we, the mere mortals only get an occasional hint at what’s going on behind the opaque, closed curtains. Like a hint from Verge that OpenAI’s getting ready to release GPT 4.1, a revamped version of OpenAI’s GPT-4o multimodal model. Smaller mini and nano versions will be released in parallel. So far, OpenAI released GPT 4.5, 4, 4o models, and I’m already struggling to remember which one does what. If they really name their latest model - GPT 4.1, I’m completely giving up.

Another release I look forward to was announced by OpenAI’s CFO Sarah Friar. She confirmed that OpenAI’s working on Agentic Software Engineer; an agent that can build apps, handle pull requests, conduct QA, fix bugs, and write documentation. The type of talk that Sarah gave is becoming very usual, although it causes an unusual level of anxiety. Like an indecisive teenager that flirts with both “the guy” and “the guy”’s best friend, Sarah flirts with the idea of “augmenting” software developers while simultaneously building systems that can/will REPLACE them. And to make things even worse, on the list of professionals that might be replaced soon, we might find personal assistants/therapist thanks to OpenAI’s updated memory feature.

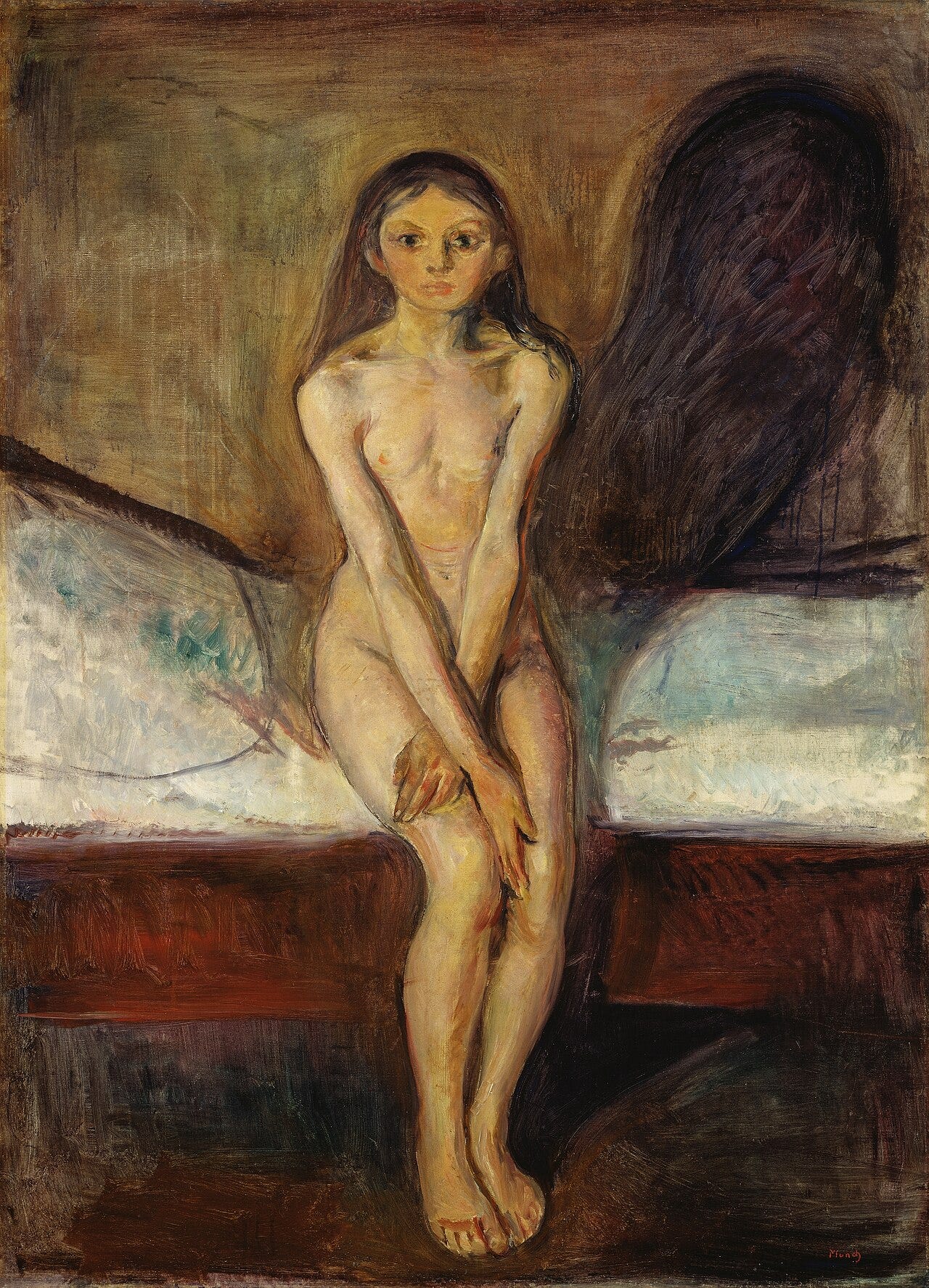

Now don’t ask me to explain why I pestered ChatGPT 4o to remove one of my legs and make me one-legged in the photos. It was an unfortunate phase that I successfully forgot about. But it seems like ChatGPT forgets nothing after the memory feature got introduced. Paying users can have ChatGPT “Reference saved memories” and “Reference chat history” — two options you can opt out of. But if you don’t, ChatGPT practically remembers all of your conversations thanks to seemingly “infinite” memory, which is why when I asked it to “describe me as a person based on all of our previous conversations”, it told me that I’m “Maya: The One-Legged Visionary With an Aesthetic Agenda”. Ouch. Feature sounds like a big deal, a truly knowledgeable personal assistant that knows even most embarrassing intimate parts about me, like my one-legged phase. But a lot of the reactions have been:

“Thanks, I hate it.” or

“I wanted the opposite, a way to make it forget what I just said when I'm trying to generate different styles of images without having to open different conversations.”

To be fair, Anthropic and Google already integrated memory into their chatbots. Sam Altman makes it sound like ChatGPT already had some sort of memory, it just got updated last week. Which proves the point: everyone is turning chatbots into personal assistants.

code not vibing. yet.

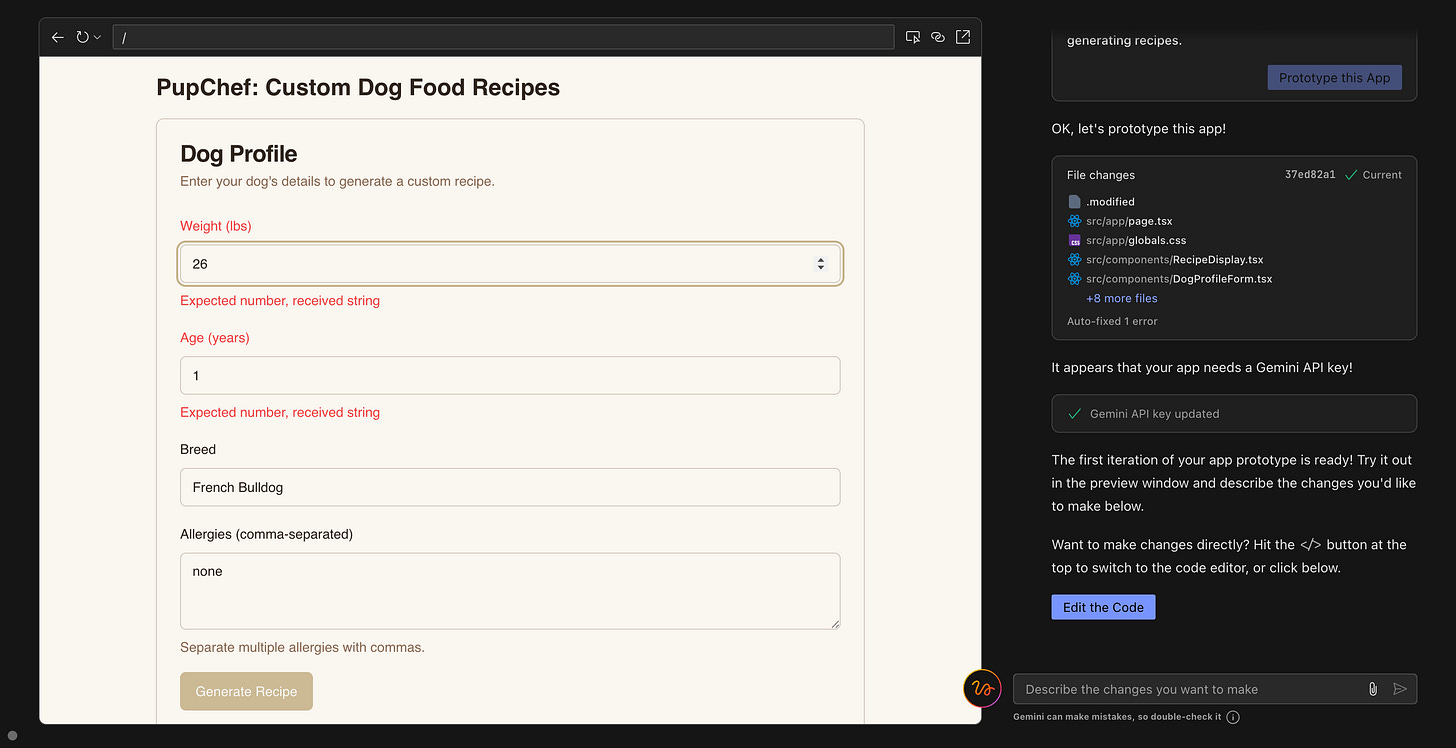

Speaking of assistants… Google has released one for vibe coding called Firebase Studio. Basically, it takes your prompt and creates a prototype of an app. When I gave it a simple prompt “build an app that generates recipes for dogs based on age, breed and weight” I got a clean, sleek mockup of an app within minutes. But behind the pleasant appearance of “PupChef”, things were less functional than families vacationing in White Lotus. None of the buttons were working and every time I asked it to fix element A, it would break element B. Firebase Studio isn’t built on top of the amazing Gemini 2.5 Pro, but a less intelligent, older Gemini 2.0 model. This might be a reason why it flopped so spectacularly. It was crucified as “unusable” and “a total mess” by an army of vibe coders on Reddit.

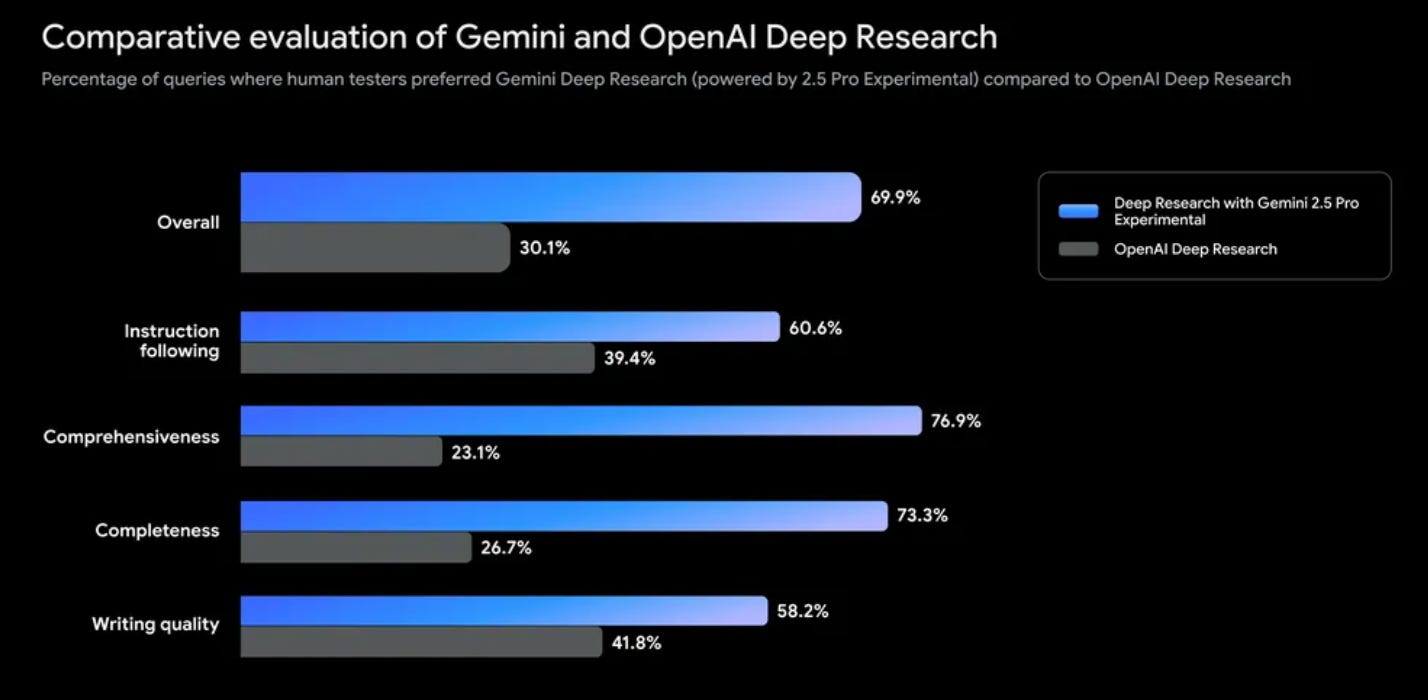

Luckily, Google birthed other, more fortunate things this week. Their Deep Research is now available on Gemini 2.5 Pro Experimental – perhaps today's leading model. Google suggests, based on their own evaluations, that users prefer their version over OpenAI's. I use both platforms. While Gemini's 2.5 Deep Research can initially overwhelm me with an intense flurry of questions – same way my frenchie overwhelms me with barks around lunchtime – I still prefer its “final report” compared to OpenAI's feature.

Firebase Studio, Deep Research, Manus, ChatGPT’s memory feature, what do all of these have in common? Well, they’re all some sort of a personalized, specialized agent; a holy grail of our times. While everyone’s trying to build it first, Google doing something else in addition. It’s trying to be the first to build infrastructure for them. This week they’ve launched Agent Development Kit (ADK), an open-source framework aimed at simplifying the creation of agents, together with Agent2Agent (A2A) protocol, a protocol that aims to make it possible for them to collaborate. In a classic “in a gold rush, sell shovels” move, Google is creating an ecosystem that will make everyone working with agents depend on them someday.

final thoughts

While Google preps shovels for selling and OpenAI preps models with mind-bending capabilities (but confusing names), I still have to rely on myself to cure migraines. This was another “ordinary” week in AI, full of small but wonderful breakthroughs. Of course, with a dash of mandatory backstabbing among the AI giants in order to win our attention. See you next week.