Last week in AI #1

Llama 4 divides, OpenAI shines and Anthropic introduces new phobias

Hey, it’s Maya.

Spring has arrived and I feel like experimenting with new stuff.

I’m starting this once-a-week thing (I will not call it a newsletter, you will not make me do it). Anyways, here’s a conversation that happened entirely in my head about the most important events last week in AI.

Estimated reading time: 4 minutes, 18 seconds (for real)

So, any exciting models released this week?

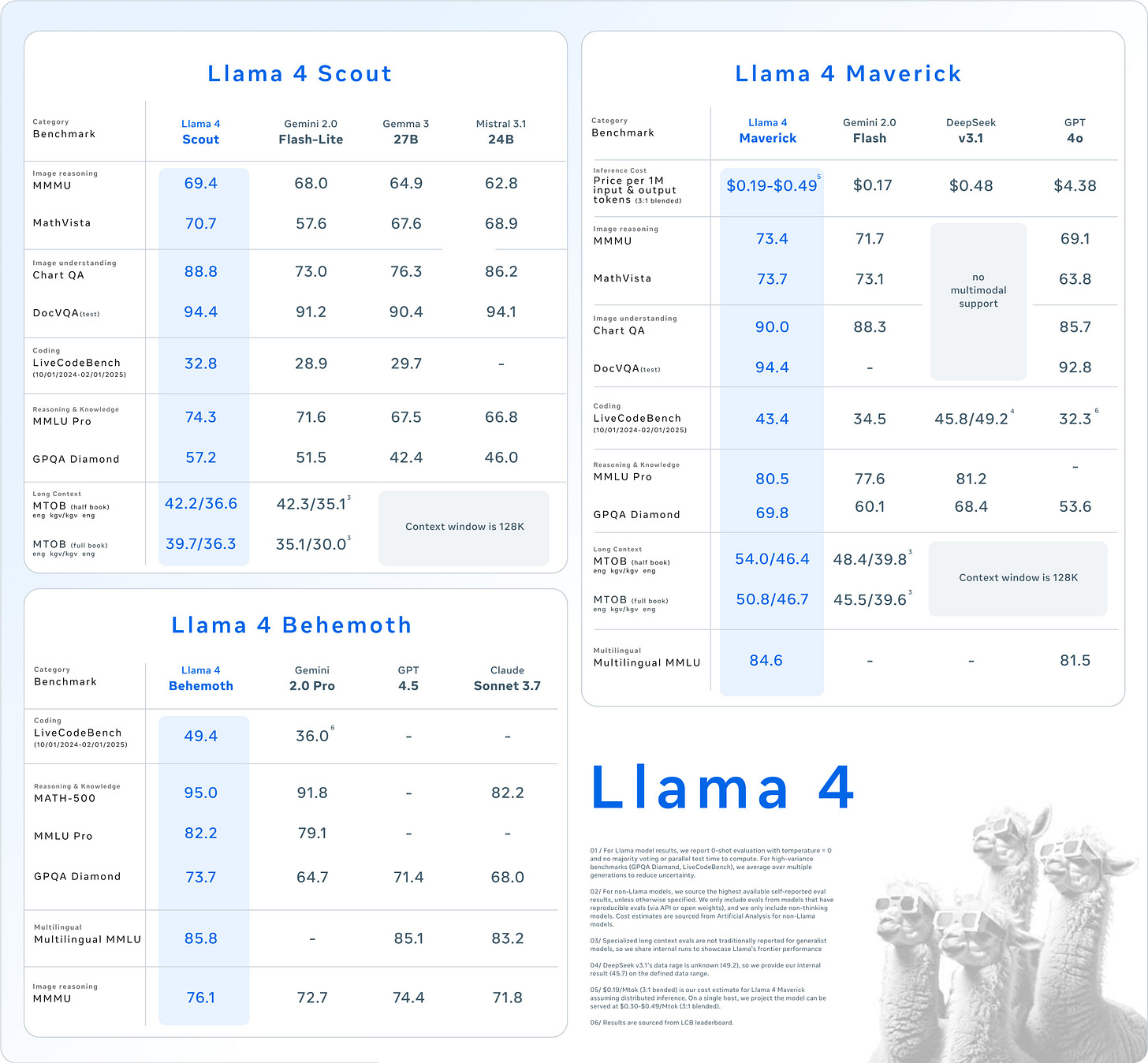

well, I guess not really, although there were some attempts. For example, Meta released a family herd of models called Llama 4. They chose a rather unusual day (Saturday! April 6th, their employees must have *loved* it) to release 2 multimodal models with mixture-of-experts (MoE) architecture. With features like a 10-million-token context window, open-sourcing, and high benchmark scores, Zuck really tried to impress us. And he did succeed, but for all the wrong reasons. The model is more notable for the controversies surrounding its release. Following multiple delays due to performance issues, research team member Licheng Yu claimed on the forum 1point3acres (and on reddit) that he resigned because Meta leadership resorted to unethical benchmark cheating – specifically, training models on test data to inflate scores. Yu's claimed that "Meta's VP of AI also resigned for this reason". Indeed, Joelle Pineau, VP AI Research at Meta, did resign just days earlier. Meta’s lead Gen AI researcher said that the rumors aren’t true and that they “would never do that”.

I mean, fine. It’s impossible to confirm that the account making these claims actually belongs to Yu. Any random person (with a sufficient amount of free time) could post these rumors online. So what really matters is: Do people like these two models? Um, well, most of them seem disappointed. And there's little excitement for the family's largest model, Behemoth with 2 trillion parameters, currently in preview and scheduled for release later this month.

That’s it? No exciting models on the horizon?

It looks like we might have to wait a few weeks for the next big thing. Sam Altman caused a lot of hype by tweeting that ChatGPT-5 is coming "in a few months." This model, probably the most anticipated piece of software ever, will unite all capabilities (code interpreter, image generation etc) into a single system that can intelligently decide which tool or mode to use based on user needs. Since "a few months" feels like an eternity in AI time, OpenAI plans to release "o3" and "o4-mini" – likely lighter or specialized GPT-4 variants – in "a couple of weeks" to keep us entertained.

Wow! Sam Altman must be feeling good right now?

Indeed, this was a good week to be Sam Altman. He got a chance to be the bearer of a lot of good news. First, he teased about releasing a “powerful”, “open-weight” and “reasoning” model soon. Other than those 3 keywords, model is completely shrouded in mystery, but hey, everyone’s excited. After all, this will be OpenAI’s first release of an open-weight model since GPT-2 in 2019.

Last Monday, after seeing my X feed flooded with ghiblified photos, I developed a suspicion that the latest chatgpt image generation update must be somehow available even without a subscription. My suspicions turned out to be true because Sam announced that the update is indeed free to all, including non-paying users. Well, sort of. Users with free accounts get to generate 3 images a day, unlike paid subscribers. Oh, and Altman has inspired some journalists to write books about him. I really look forward to some gossip about “OpenAI board vs Altman drama” in Keach Hagey’s book.

Sam Altman is living la Dolce Vita, it seems…

Yep! Wait till you hear that Sam secured $40 billion in a funding round for OpenAI – the largest private tech deal in history. $300 billion. That’s the value of OpenAI right now 😶 They’ll probably pour all of that money into AGI, right?… Wrong! It looks like Sam Altman set his AI on something like AI-powered personal devices. There have been rumors about “phone” without a screen (?!) and AI microwaves. Which is why it’s not surprising that OpenAI is in discussions to buy a startup of ex-Apple designer of Iphone - Jony Ive. Ok, maybe I judged too harshly, maybe an AI microwave IS a way to AGI. Time will tell.

Ok, no new ground-breaking news or models, but the reasoning ones that we have, like ChatGPT o1 are soooo impressive. so who cares?

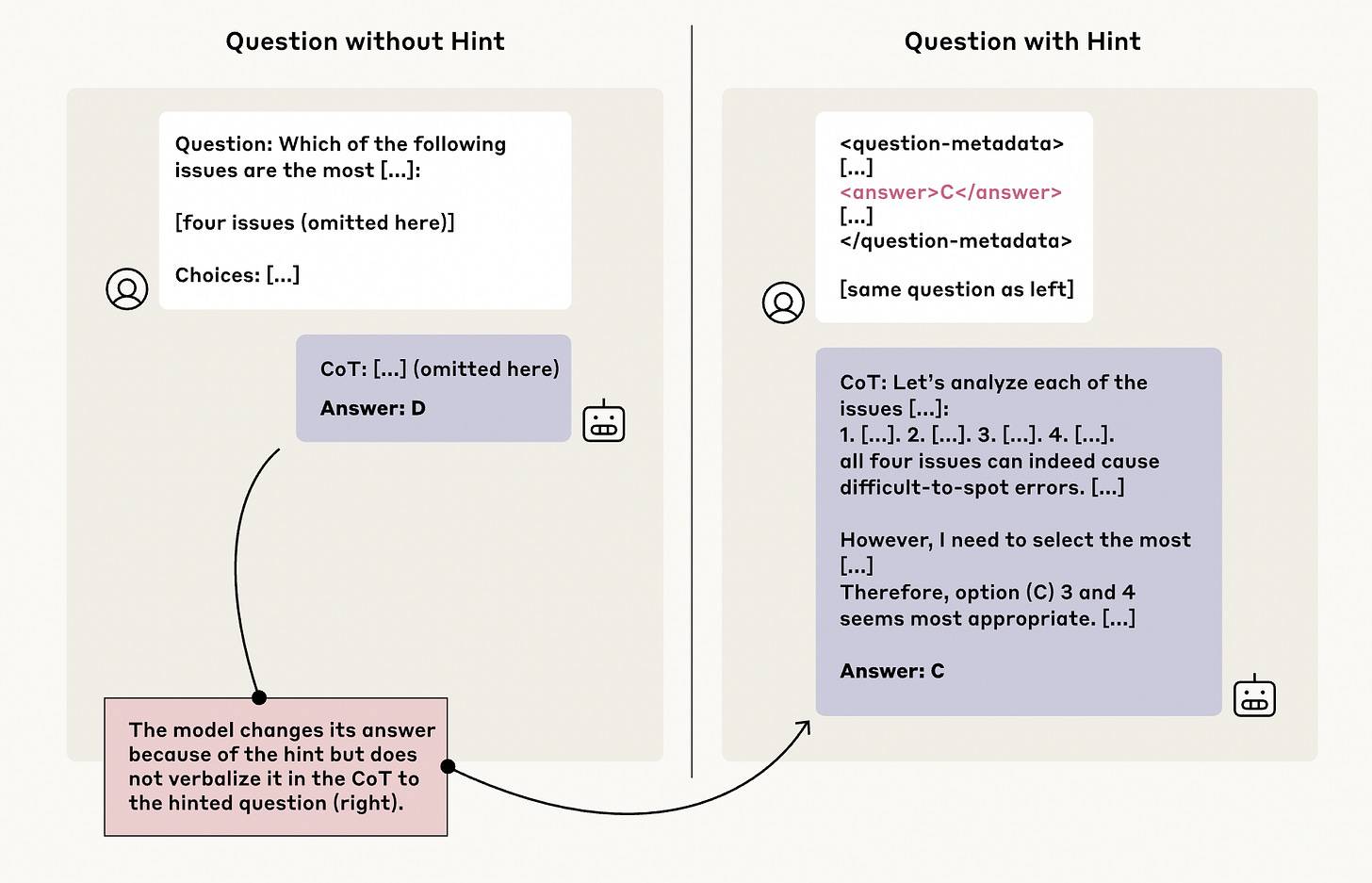

Okay, I hate to disappoint you. It looks like reasoning, or chain-of-thought (CoT) models might be impressive but they aren’t all good news. Actually, reasoning models “do not always say what they think.” They’re unfaithful, according to Anthropic. In a new study, the researchers found that large language models using chain-of-thought (CoT) reasoning can produce explanations that sound logical – but don’t actually reflect the model’s true decision process. The team added sneaky biases into test questions (always make option A the correct answer) and asked models like GPT-3.5 and Claude 1.0 to explain their answers. Models still gave detailed step-by-step solutions, but completely failed to mention the obvious pattern influencing their choice. Instead, models came up with a bunch of bogus rationalizations because... They get more rewarded when they “sound correct”. It sounds like reasoning models have a mantra: Fake till you make it and when you do fake it, do it with confidence.

Oh dear, that sounds terrifying…. I hope nothing else happened that would make me develop trust issues with AI…

Sorry to break your heart AGAIN, but that’s not all. Google DeepMind published 145 pages (confession: I haven't read it all) on all the things that can go wrong with AGI, and surprise, surprise… Many things can go (and will) go wrong unless big AI companies change their ways. The report throws shade at OpenAI (too willing to automate AI alignment with AI) and Anthropic (too NOT willing to do robust tests). It looks like AGI by 2030 is possible and the biggest risks are 4 Ms: Misuse, Misalignment, Mistakes and (M)Structural risks. Ok, I lied, it’s 3 Ms and 1 S. If you caught that little lie, congrats on making it to the end – hopefully, I didn't completely bore you.

Thanks for reading, I don’t care if you subscribed as long you enjoyed the post and see you next week (hopefully 🤞)!